Security Telemetry Pipelines: The Missing Link in Modern Security Architecture

Security teams are under pressure on several fronts at once: security data volumes keep growing, alert fatigue is widespread, adversaries are increasingly sophisticated, and skilled analysts are in short supply.

The goals for security operations centers (SOCs) have remained largely the same: lower MTTD (Mean Time to Detect) and MTTR (Mean Time to Respond), reduce false positives, and scale as needed. However, achieving these goals has become considerably harder.

In this context, security telemetry pipelines are no longer a nice-to-have enhancement; they are becoming a necessity. When terabytes of data flow into your SIEM every day, real-time context (for example, detection logic and reasoning grounded in security ontologies) and intelligent filtering are no longer optional.

The Current State: Challenges with Traditional Approaches

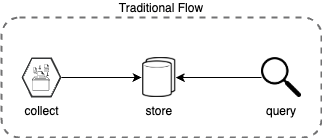

Traditional SIEM architectures follow a simple pattern:

While this approach worked in the past, it's becoming increasingly inadequate for modern security challenges:

- Data Volume: Even smaller organizations generate terabytes of security data daily

- Alert Fatigue: A large percentage of alerts are false positives, overwhelming security teams

- Real-time Requirements: Threats evolve faster than batch processing can handle

- Cost Inefficiency: Storing everything without intelligent filtering is expensive

- Vendor Lock-in: Vendors tend to store data in proprietary formats, making extensibility more difficult

Security Telemetry Pipelines: The Missing Middle Layer

Overview

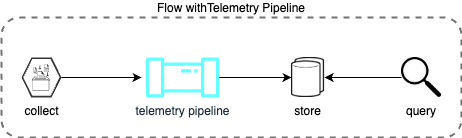

To address these challenges, we need a telemetry pipeline layer that sits between data collection and storage.

This layer should:

- Transform data into standardized formats (e.g. OCSF, OpenTelemetry)

- Filter out noise and irrelevant events

- Enrich data with threat intelligence and related context

- Aggregate related events for better context

- Apply rules for real-time detection with security ontologies (e.g. MITRE ATT&CK)

- Use AI agents for advanced use cases, reasoning, and efficiency

| Phase | Traditional Flow | With Telemetry Pipeline |
|---|---|---|
| Collection | Syslog, API, agents | Syslog, API, agents |
| Processing | N/A | Transform, Filter, Aggregate, Detect, Agentic AI Flows, etc. |
| Store/Query | SIEM/EDR/SOAR | SIEM/EDR/SOAR |
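
To make the Processing row more concrete, here is a minimal Python sketch of how transform, filter, and enrich stages could be chained. Everything in it is an assumption for illustration: the field names, the `THREAT_INTEL` lookup table, and the `run_pipeline` helper are hypothetical and not taken from any specific product.

```python
from typing import Callable, Iterable, Iterator, Optional, Sequence

Event = dict
Stage = Callable[[Event], Optional[Event]]  # a stage returns None to drop the event

# Hypothetical threat-intel table; a real pipeline would query a feed or cache.
THREAT_INTEL = {"203.0.113.7": "known-scanner"}


def normalize(event: Event) -> Optional[Event]:
    """Transform: map source-specific field names onto one common shape."""
    return {
        "src_ip": event.get("src") or event.get("source_ip"),
        "user": event.get("duser") or event.get("username"),
        "action": (event.get("action") or "unknown").lower(),
    }


def drop_noise(event: Event) -> Optional[Event]:
    """Filter: discard events treated as benign (here, heartbeats)."""
    return None if event["action"] == "heartbeat" else event


def enrich(event: Event) -> Optional[Event]:
    """Enrich: attach a threat label when the source IP is in the intel table."""
    label = THREAT_INTEL.get(event["src_ip"])
    return {**event, "threat_label": label} if label else event


def run_pipeline(events: Iterable[Event], stages: Sequence[Stage]) -> Iterator[Event]:
    """Push each event through every stage; stop early if a stage drops it."""
    for event in events:
        current: Optional[Event] = event
        for stage in stages:
            current = stage(current)
            if current is None:
                break
        if current is not None:
            yield current


raw = [
    {"src": "203.0.113.7", "duser": "john.doe", "action": "LOGIN"},
    {"source_ip": "192.168.1.100", "username": "svc", "action": "heartbeat"},
]
processed = list(run_pipeline(raw, [normalize, drop_noise, enrich]))
print(processed)
```

Keeping each stage as a small function that either returns an event or drops it means stages can be reordered or swapped without touching the others. In a streaming deployment the same functions would sit between a consumer and a producer.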

Key Features

- Real-time Transformation: Convert diverse data formats into standardized schemas such as OCSF and OpenTelemetry

- Intelligent Filtering: Reduce noise by dropping benign events and duplicates, and separate compliance (regulatory) events from security events where necessary

- Streaming Aggregation: Build context from multiple related events

- Real-time Detection Rules: Apply event-based and temporal detection logic to streaming data

- AI Agents: Improve pipeline processing with anomaly detection, automatic OCSF mapping, behavioral analytics, context enrichment, and similar tasks
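
As a rough illustration of streaming aggregation combined with a temporal detection rule, the sketch below groups failed logins per user and emits a finding when several occur within a short window. The event fields (`user`, `outcome`, `time`), the rule name, and the thresholds are assumptions chosen for the example, and it assumes events arrive in time order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator


def detect_failed_login_bursts(
    events: Iterable[dict], threshold: int = 3, window_seconds: int = 60
) -> Iterator[dict]:
    """Yield a finding when a user reaches `threshold` failures within the window."""
    recent: Dict[str, Deque[int]] = defaultdict(deque)  # user -> failure timestamps
    for event in events:
        if event["outcome"] != "failure":
            continue
        window = recent[event["user"]]
        window.append(event["time"])
        # Evict failures that fell out of the sliding window.
        while window and event["time"] - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold:
            yield {
                "rule": "failed-login-burst",  # hypothetical rule name
                "user": event["user"],
                "count": len(window),
                "time": event["time"],
            }
            window.clear()  # avoid re-alerting on the same burst


events = [
    {"user": "john.doe", "outcome": "failure", "time": 0},
    {"user": "john.doe", "outcome": "failure", "time": 20},
    {"user": "alice", "outcome": "failure", "time": 25},
    {"user": "john.doe", "outcome": "success", "time": 30},
    {"user": "john.doe", "outcome": "failure", "time": 45},
    {"user": "alice", "outcome": "failure", "time": 200},
]
findings = list(detect_failed_login_bursts(events))
print(findings)
```

Only the timestamps inside the window are kept per user, so state stays small even on a high-volume stream. A stream processor would typically hold the same state in a keyed window.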

Key Benefits

- Reduce costs through optimized data ingestion and storage

- Boost efficiency via automated data enrichment and alert prioritization

- Lower MTTD & MTTR with real-time detections and intermediary findings

- Enhance threat detection with holistic data analysis

- Scale operations to meet complex environment demands

- Avoid vendor lock-in with flexible, vendor-agnostic approaches and open-data formats

Open Data Formats and Standards

Two open standards currently look the most promising: OCSF (Open Cybersecurity Schema Framework) for security events and OpenTelemetry for observability.

There have been other attempts at a common schema for security data, but OCSF appears to be taking the lead by providing a vendor-neutral standard to follow.

Some Benefits of OCSF and OpenTelemetry:

- Standardized Schema: Consistent data format across all sources

- Vendor Agnostic: No lock-in to specific security tools

- Interoperability: Seamless integration between different systems

- Extensibility: Easy to add custom fields and attributes

Such standards let vendors build generic solutions that are not tied to a single product ecosystem, which should improve the industry and its solution sets over time.

Here's a practical example showing how a telemetry pipeline transforms CEF (Common Event Format) data into standardized OCSF format:

Before (CEF Format):

    CEF:0|Symantec|Endpoint Protection|6.1.2|1001|User Login|Medium|msg=User john.doe logged in from 192.168.1.100 duser=john.doe src=192.168.1.100 deviceExternalId=workstation-01

After (OCSF Format):

    {
      "activity_id": 1,
      "activity_name": "Logon",
      "category_uid": 3,
      "category_name": "Identity & Access Management",
      "class_uid": 3002,
      "class_name": "Authentication",
      "severity_id": 3,
      "severity": "Medium",
      "time": 1701441022000,
      "user": {
        "name": "john.doe",
        "uid": "12345",
        "type": "User Account",
        "domain": "corp.local"
      },
      "device": {
        "name": "workstation-01",
        "ip": "192.168.1.100",
        "type": "Workstation",
        "os": "Windows 11",
        "location": "HQ-Building-A"
      },
      "metadata": {
        "uid": "evt_12345_20231201_143022",
        "product": {
          "name": "Symantec Endpoint Protection",
          "version": "6.1.2"
        },
        "original_format": "CEF",
        "transformation_timestamp": 1701441023000
      }
    }
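
The mapping step itself can be sketched in a few lines of Python. This is a simplified illustration, not a complete CEF parser or OCSF mapper. It assumes the CEF extension is a space-delimited list of key=value pairs, ignores escaped characters, and assumes a login maps to the OCSF Authentication class (`class_uid` 3002, Logon activity). The helper names `parse_cef` and `cef_login_to_ocsf` are hypothetical, and the timestamp is passed in because the sample record carries none.

```python
import json
import re

# CEF severity labels mapped to OCSF severity_id values (99 = Other).
SEVERITY_IDS = {"Unknown": 0, "Low": 2, "Medium": 3, "High": 4, "Very-High": 5}

# A value runs until the next " key=" token or the end of the string.
EXTENSION_PAIR = re.compile(r"(\w+)=(.*?)(?=\s+\w+=|$)")


def parse_cef(line: str) -> dict:
    """Split a CEF record into its 7 header fields plus an extension dict."""
    parts = line.split("|", 7)  # naive: does not handle escaped pipes
    if len(parts) != 8 or not parts[0].startswith("CEF:"):
        raise ValueError("not a CEF record")
    _version, vendor, product, dev_version, sig_id, name, severity, ext = parts
    return {
        "vendor": vendor,
        "product": product,
        "version": dev_version,
        "signature_id": sig_id,
        "name": name,
        "severity": severity,
        "extension": dict(EXTENSION_PAIR.findall(ext.strip())),
    }


def cef_login_to_ocsf(cef: dict, time_ms: int) -> dict:
    """Map a parsed CEF login record onto a small subset of OCSF Authentication."""
    ext = cef["extension"]
    return {
        "activity_id": 1,  # Logon
        "activity_name": "Logon",
        "category_uid": 3,  # Identity & Access Management
        "class_uid": 3002,  # Authentication
        "severity": cef["severity"],
        "severity_id": SEVERITY_IDS.get(cef["severity"], 99),
        "time": time_ms,
        "user": {"name": ext.get("duser")},
        "device": {"name": ext.get("deviceExternalId"), "ip": ext.get("src")},
        "metadata": {
            "product": {
                "name": cef["product"],
                "vendor_name": cef["vendor"],
                "version": cef["version"],
            },
            "original_format": "CEF",  # field name taken from the example above
        },
    }


line = (
    "CEF:0|Symantec|Endpoint Protection|6.1.2|1001|User Login|Medium|"
    "msg=User john.doe logged in from 192.168.1.100 "
    "duser=john.doe src=192.168.1.100 deviceExternalId=workstation-01"
)
cef = parse_cef(line)
ocsf = cef_login_to_ocsf(cef, time_ms=1701441022000)
print(json.dumps(ocsf, indent=2))
```

Fields such as the user domain or device location do not exist in the CEF record. In a pipeline they would come from a separate enrichment step (for example, a directory or asset inventory lookup) rather than from the parser.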

Key Benefits of This Transformation:

- Standardized Schema: Consistent field names and data types across all sources

- Enhanced Context: Additional metadata and enrichment data

- Vendor Agnostic: No longer tied to a vendor-specific format

- Searchable: Structured data enables better querying and correlation

AI Agents on Streaming Security Data

Telemetry pipelines should incorporate AI-based capabilities as well. Following the principles of high cohesion and low coupling, it makes sense to implement several narrowly scoped AI agents that work together toward the following:

- Real-time threat analysis and anomaly detection

- Predictive analytics that identify potential threats before they materialize

- Streamlined operations via automated or assisted mapping to standards (e.g., OCSF and OpenTelemetry)

- Fewer false positives than traditional SIEM rule engines

- Faster response times with automated analysis and data enrichment
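
An agent needs signals to reason over. As one simple example of such a signal, here is a rolling z-score check on per-minute event counts. To be clear, this is a plain statistical baseline standing in for anomaly detection, not an AI agent. The class name, history size, and threshold are assumptions for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class RateAnomalyDetector:
    """Flag a count that deviates strongly from the recent history of counts."""

    def __init__(self, history: int = 30, threshold: float = 3.0, min_samples: int = 5):
        self.counts = deque(maxlen=history)  # most recent non-anomalous counts
        self.threshold = threshold           # z-score above which we flag
        self.min_samples = min_samples       # warm-up period before flagging

    def observe(self, count: int) -> bool:
        is_anomaly = False
        if len(self.counts) >= self.min_samples:
            mu = mean(self.counts)
            sigma = pstdev(self.counts)
            if sigma > 0:
                is_anomaly = abs(count - mu) / sigma > self.threshold
            else:
                # Perfectly flat history: any change counts as a deviation.
                is_anomaly = count != mu
        if not is_anomaly:
            # Keep outliers out of the baseline so one spike does not mask the next.
            self.counts.append(count)
        return is_anomaly


detector = RateAnomalyDetector()
per_minute_counts = [10, 12, 11, 9, 10, 11, 95, 10]
flags = [detector.observe(c) for c in per_minute_counts]
print(flags)
```

A flagged interval could then be handed to an agent together with the enriched events from that window, so the more expensive reasoning only runs when something looks unusual.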

Implementation Considerations

Technology Stack

For a robust security telemetry pipeline, at the very least we need a Streaming Platform and a Processing Engine.

Streaming Platform: My personal choice is Apache Kafka for high-throughput data streaming. It is the de facto standard streaming platform at many enterprises and has a large open-source community. For organizations that require commercial support, Confluent and other vendors provide licensed, enterprise-supported distributions with additional features.

Processing Engine: There are many solutions in this space. You can also build your own real-time processing application with Apache Flink or Kafka Streams. For our purposes, we're developing Padas to address the challenges described above.

The Road Ahead

Decentralized security models, such as SOCless architectures, seem to be gaining popularity in discussions about the future of information security. Telemetry pipelines play a central role in modernizing security operations that utilize decentralized architectures.

One approach is to build data once, build it right, and reuse it anywhere within milliseconds of its creation. Combined with security telemetry pipelines, this approach will become essential for:

- Providing faster detection and response with real-time processing and correlation

- Reducing operational costs through intelligent data management

- Eliminating data quality and consistency issues at the source

- Reducing duplicative processing and associated costs

- Improving security outcomes with better context and correlation

- Enabling event-driven architecture for proactive security measures

The future belongs to organizations that can process security data intelligently, not just store it. Security telemetry pipelines are the key to unlocking this future.

Ready to transform your security operations? At Padas, we've been building a flexible pipeline platform inspired by these principles.

Reach out if you're exploring this path or have questions about modernizing your security architecture.

Tags: #SecurityPipeline #Telemetry #Kafka #SIEM #OCSF #OpenTelemetry #AIAgents #Cybersecurity