Buffer Overflows: Why Old Bugs Still Matter

After 25 years in information security, I've seen my fair share of vulnerabilities come and go. There's one classic that never seems to fade away: the humble buffer overflow. From my early days writing C/C++ code and hunting down memory leaks, to performing white hat hacking and teaching security training with sample overflow exploits, I've witnessed firsthand how this seemingly simple memory management issue can cause major problems. Buffer overflows aren't just academic exercises or relics from the 1990s; they're still very much alive in today's high-performance systems, and they're particularly relevant when building software that needs to handle millions of events per second reliably.

A buffer overflow happens when a program writes more data into memory than the allocated space allows. Instead of stopping, the excess data spills into adjacent memory. That corruption can cause crashes, data leaks, or — in the most dangerous cases — remote code execution.

This isn't just theoretical. While the Morris Worm (1988), Code Red (2001), and Slammer (2003) are famous historical examples, buffer overflows continue to plague modern systems. Recent years have seen critical vulnerabilities like CVE-2021-3156 (the "Baron Samedit" heap overflow in sudo) and CVE-2023-4863 (a heap buffer overflow in libwebp), along with numerous buffer overflow CVEs affecting everything from network devices to web browsers.

Why does it still happen? Most cases trace back to C and C++ code, where developers manage memory manually. Without built-in bounds checking, even a single strcpy() or gets() call can turn into an attack vector. Despite decades of mitigations, the National Vulnerability Database continues to catalog hundreds of buffer overflow vulnerabilities each year, proving that this "classic" vulnerability remains very much alive.
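
To make the strcpy() risk concrete, here is a minimal sketch of a bounded copy. The helper name `copy_bounded` and its return convention are assumptions made for illustration; the idea is simply to compare the source length against the destination capacity before any byte is written.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper (not a standard function): copy src into dst,
 * which has room for dst_size bytes including the NUL terminator.
 * Returns 0 on success, -1 if the arguments are invalid or src does not fit. */
int copy_bounded(char *dst, size_t dst_size, const char *src) {
    if (dst == NULL || src == NULL || dst_size == 0) {
        return -1;
    }
    size_t len = strlen(src);
    if (len >= dst_size) {
        return -1;                 /* refuse rather than overflow or truncate */
    }
    memcpy(dst, src, len + 1);     /* +1 copies the NUL terminator */
    return 0;
}
```

Rejecting oversized input, rather than truncating it silently, is a design choice in this sketch: the caller has to decide what an over-long string means.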

TL;DR

Buffer overflows remain a critical vulnerability in systems programming. While C/C++ offer raw performance, their manual memory management creates significant security risks. Modern alternatives like managed languages provide safety but introduce performance overhead through garbage collection. The ideal solution combines systems-level performance with compile-time memory safety guarantees.

Key Takeaways:

- Buffer overflows are still relevant in 2025, especially in security-critical systems

- Performance vs. safety is a false dichotomy in modern systems programming

- High-throughput systems require both speed and memory safety

How Programs Use Memory: Stack vs Heap

Programs don’t just “run.” They use memory in structured ways. The two most important regions for buffer overflows are the stack and the heap.

| Aspect | Stack | Heap |
|---|---|---|
| Allocation | Automatic (by compiler) | Manual (malloc/free) |
| Size | Smaller | Larger |
| Flexibility | Fixed-size | Resizable |
| Safety | Thread-safe, private to function | Shared, riskier |
| Use Cases | Local variables, arrays | Dynamic data, linked structures |

- The stack is like a neat stack of plates: fixed size, efficient, but inflexible.

- The heap is like a warehouse: flexible, but requires careful bookkeeping.

Both areas can be corrupted if programs don’t respect memory boundaries.
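
The difference between the two regions is easiest to see side by side. The following is a small sketch, with a hypothetical function name `make_greeting`, that builds a string in a stack buffer and then copies it to the heap so it can outlive the call.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: builds a message in a stack buffer, then returns
 * a heap copy that outlives the call. The caller must free() the result. */
char *make_greeting(void) {
    char local[16] = "hello";        /* stack: released automatically on return */
    size_t len = strlen(local);

    char *copy = malloc(len + 1);    /* heap: stays allocated until free() */
    if (copy == NULL) {
        return NULL;
    }
    memcpy(copy, local, len + 1);    /* +1 copies the NUL terminator */
    return copy;                     /* returning `local` itself would dangle */
}
```

The stack buffer costs nothing to allocate but disappears when the function returns; the heap copy survives, at the price of an explicit free() by the caller.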

Buffer Overflow in Action

Stack Buffer Overflow

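A stack buffer overflow happens when a program writes beyond the space allocated for a local variable. (This is different from stack exhaustion, which is also commonly called a "stack overflow".)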

    #include <stdio.h>

    void vulnerable(void) {
        char buf[8];   // room for 7 characters plus the NUL terminator
        gets(buf);     // unsafe: no bounds check (gets() was removed in C11)
    }

    int main(void) {
        vulnerable();
        return 0;
    }

If the user types more than 7 characters, the input plus its terminating NUL byte no longer fits in the buffer and overwrites adjacent memory on the stack, potentially including the function’s return address. With crafted input, an attacker can hijack program execution.
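
A bounded alternative to gets() is the standard fgets(), which takes the buffer size as an argument. The wrapper below is only a sketch: the name `read_line` and its return codes are assumptions, and it chooses to discard the rest of an over-long line so the next read starts cleanly.

```c
#include <limits.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: read one line from `in` into buf (capacity `size`).
 * Returns 0 on success, 1 if the line was too long (the excess is
 * discarded), and -1 on EOF, read error, or invalid arguments. */
int read_line(FILE *in, char *buf, size_t size) {
    if (in == NULL || buf == NULL || size == 0) {
        return -1;
    }
    if (size > INT_MAX) {
        size = INT_MAX;                     /* fgets takes an int count */
    }
    if (fgets(buf, (int)size, in) == NULL) {
        return -1;
    }
    size_t len = strlen(buf);
    if (len > 0 && buf[len - 1] == '\n') {
        buf[len - 1] = '\0';                /* strip the newline */
        return 0;
    }
    /* No newline stored: the line ended at EOF, fit exactly, or was cut off. */
    int c = fgetc(in);
    if (c == EOF || c == '\n') {
        return 0;
    }
    while ((c = fgetc(in)) != '\n' && c != EOF) {
        /* discard the remainder of the over-long line */
    }
    return 1;
}
```

Calling `read_line(stdin, buf, sizeof buf)` in place of `gets(buf)` keeps every write inside the 8-byte buffer, whatever the user types.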

Heap Buffer Overflow

Heap overflows target dynamically allocated memory.

    #include <stdlib.h>
    #include <string.h>

    int main(void) {
        char *buf = malloc(8);
        if (buf == NULL) return 1;
        strcpy(buf, "AAAAAAAAAAAA"); // unsafe: 12 chars + NUL = 13 bytes into 8
        free(buf);
        return 0;
    }

Here, memory corruption can damage heap metadata or adjacent chunks, leading to crashes or even arbitrary code execution.
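
One way to avoid this class of bug is to track the allocation's capacity next to the pointer and grow it before writing. The sketch below assumes a hypothetical `byte_buf` type and `byte_buf_append` function; it is not taken from any particular library.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical growable buffer: capacity travels with the pointer. */
typedef struct {
    char  *data;
    size_t len;   /* bytes in use */
    size_t cap;   /* bytes allocated */
} byte_buf;

/* Append n bytes from src, growing the allocation first if needed.
 * Returns 0 on success, -1 on size overflow or allocation failure. */
int byte_buf_append(byte_buf *b, const void *src, size_t n) {
    if (b == NULL || (src == NULL && n > 0)) {
        return -1;
    }
    if (n > SIZE_MAX - b->len) {
        return -1;                          /* len + n would wrap around */
    }
    size_t needed = b->len + n;
    if (needed > b->cap) {
        size_t new_cap = b->cap ? b->cap : 16;
        while (new_cap < needed) {
            if (new_cap > SIZE_MAX / 2) {   /* doubling would wrap */
                new_cap = needed;
                break;
            }
            new_cap *= 2;
        }
        char *p = realloc(b->data, new_cap);
        if (p == NULL) {
            return -1;                      /* the old block is still valid */
        }
        b->data = p;
        b->cap = new_cap;
    }
    if (n > 0) {
        memcpy(b->data + b->len, src, n);
    }
    b->len = needed;
    return 0;
}
```

Starting from `byte_buf b = {0};`, appending the 12-byte string from the example above triggers a 16-byte allocation instead of writing past an 8-byte one. The caller releases the memory with `free(b.data)`.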

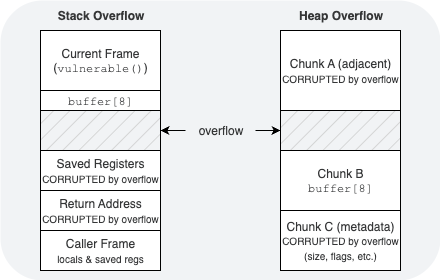

Visualizing the Problem

    STACK BUFFER OVERFLOW (addresses increase downward)

    +------------------------------+
    | Current frame: vulnerable()  |
    |   buf[8]   AAAAAAAA          |  <- input starts here
    +------------------------------+
    | Saved registers              |  <- CORRUPTED by excess input
    +------------------------------+
    | Return address               |  <- CORRUPTED by excess input
    +------------------------------+
    | Caller frame                 |
    |   locals, saved regs         |
    +------------------------------+

    HEAP BUFFER OVERFLOW (addresses increase downward)

    +------------------------------+
    | Chunk metadata for buf       |
    +------------------------------+
    | buf (size 8)   AAAAAAAA      |  <- 12 chars + NUL written into 8 bytes
    +------------------------------+
    | Next chunk metadata          |  <- CORRUPTED (size, flags, etc.)
    +------------------------------+
    | Next chunk data              |  <- CORRUPTED if the overflow is long enough
    +------------------------------+

- Stack buffer overflow: extra bytes overwrite control data (saved registers / return address) → control-flow hijack.

- Heap overflow: extra bytes spill into neighboring heap metadata/data → heap corruption, potential code execution.

In both cases, the root cause is the same: trusting input without enforcing limits.
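
Enforcing limits usually comes down to checking every length an input claims against what was actually received and what the destination can hold. The sketch below assumes a made-up wire format (one length byte followed by the payload) and a hypothetical function name `parse_message`.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical format: in[0] is the payload length, in[1..] is the payload.
 * Copies the payload to out and stores its length in *out_len.
 * Returns 0 on success, -1 if any length check fails. */
int parse_message(const uint8_t *in, size_t in_len,
                  uint8_t *out, size_t out_cap, size_t *out_len) {
    if (in == NULL || out == NULL || out_len == NULL || in_len < 1) {
        return -1;
    }
    size_t claimed = in[0];
    if (claimed > in_len - 1) {
        return -1;              /* sender claims more bytes than it sent */
    }
    if (claimed > out_cap) {
        return -1;              /* payload would not fit in the destination */
    }
    memcpy(out, in + 1, claimed);
    *out_len = claimed;
    return 0;
}
```

Both checks matter: the first prevents reading past the input, the second prevents writing past the output.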

Programming Language Comparison

Different languages handle memory safety in different ways. That choice determines how vulnerable they are to buffer overflows.

Execution Model

| Language Type | Examples | Performance | Notes |
|---|---|---|---|
| Compiled | C, C++, Rust, Go | 🔥 Fast | Runs directly on CPU |
| Interpreted | Python, Ruby | 🐢 Slow | Easy to use, slower |
| JIT/Hybrid | Java, C#, JavaScript (V8) | ⚡ Medium–Fast | Runtime optimizations |

Memory Safety

| Language | Memory Safety | Buffer Overflow Risk | How Safety Is Enforced |
|---|---|---|---|
| C / C++ | ❌ Unsafe | High | Manual checks required |
| Java | ✅ Safe | Low | Runtime bounds checks |
| Python | ✅ Safe | Low | No direct memory access |
| Go | ✅ Safe | Low | Bounds checks + garbage collection |
| Rust | ✅ Safe (by design) | Very Low | Ownership & borrow checker at compile time, plus runtime bounds checks |
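
To show what "runtime bounds checks" amount to, here is a rough C sketch of the test that safe languages perform on every indexed access. The function `get_checked` is hypothetical; in C, nothing forces callers to use it, which is exactly the gap the table describes.

```c
#include <stddef.h>

/* Hypothetical checked accessor: stores array[index] in *out.
 * Returns 0 on success, -1 if the arguments are invalid or the
 * index is out of range. */
int get_checked(const int *array, size_t length, size_t index, int *out) {
    if (array == NULL || out == NULL || index >= length) {
        return -1;              /* a safe language would raise or panic here */
    }
    *out = array[index];
    return 0;
}
```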

Garbage Collection: Pros & Cons

Garbage collection (GC) automatically frees unused memory in languages like Java, Python, and Go.

- Pros: Improves safety, reduces leaks, boosts developer productivity.

- Cons: GC pauses introduce latency, which is painful in real-time pipelines or low-latency systems.

That tradeoff matters in systems like security event processing pipelines (think Splunk, Kafka, or Elasticsearch), where both throughput and reliability are critical.

Conclusion

Buffer overflows are one of the oldest vulnerabilities in computing — but they remain relevant. They remind us that performance without safety is fragile, while safety without performance can’t keep up with modern demands.

For high-throughput, security-critical systems like event processing pipelines, the future lies in languages and runtimes that deliver both systems-level performance and strong memory safety guarantees. This is especially critical when processing millions of security events per second, where a single memory corruption could compromise the entire data pipeline. Choosing between speed and safety is no longer enough. We need both.
